Comparison with Baselines

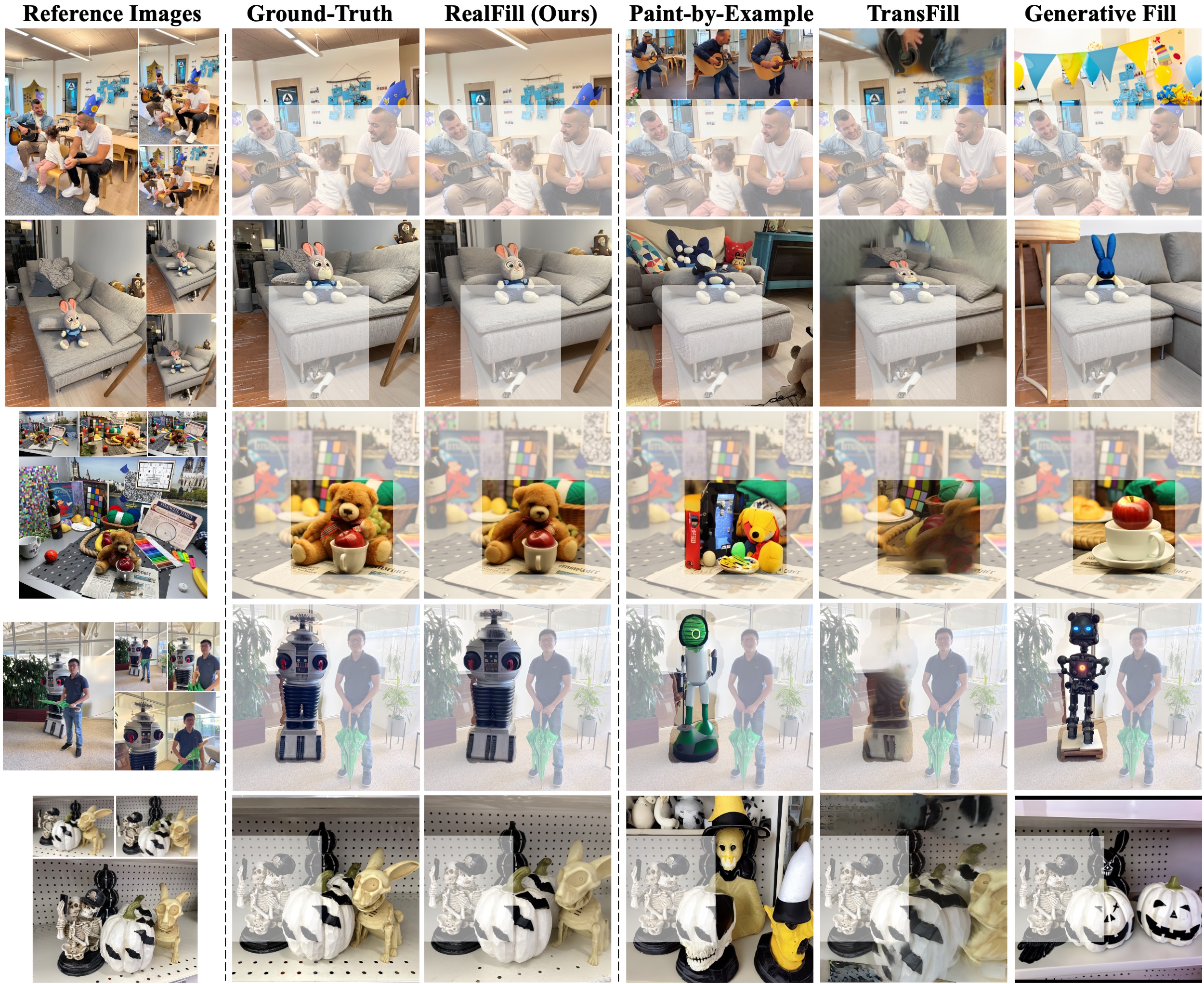

A comparison of RealFill and baseline methods. Transparent white masks are overlayed on the unaltered known regions of the target images.

- Paint-by-Example does not achieve high scene fidelity because it relies on CLIP embeddings, which only capture high-level semantic information.

- TransFill outputs low quality images due to the lack of a good image prior and the limitations of its geometry-based pipeline.

- Photoshop Generative Fill produces plausible results, they are inconsistent with the reference images because prompts have limited expressiveness.